Implementing Cloth Simulation in WebGL

The PlayCanvas WebGL game engine integrates with ammo.js - a JavaScript/WebAssembly port of the powerful Bullet physics engine - to enable rigid body physics simulation. We have recently been working out how to extend PlayCanvas' capabilities to include soft body simulation. The aim is to let developers easily set up characters that use soft body dynamics.

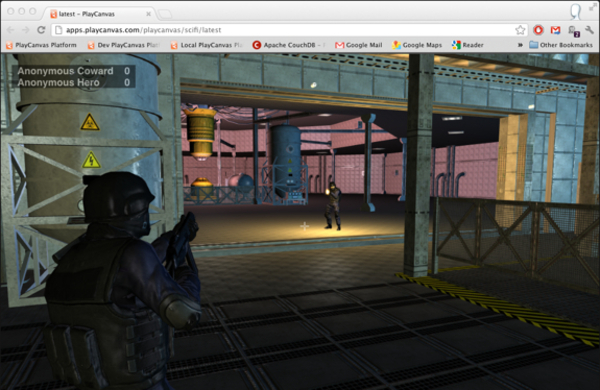

Here is an example of a character with and without soft body cloth simulation running in PlayCanvas: